Amazon Q

AWS announces Amazon Q, a new generative AI–powered assistant that is specifically designed for work and can be tailored to your business to have conversations, solve problems, generate content, and take actions using the data and expertise found in your company’s information repositories, code, and enterprise systems. Amazon Q has a broad base of general knowledge and domain-specific expertise.

Next-Generation AWS Chips: Powering AI Models

AWS introduced its Trainium2 and Graviton4 chips, a notable step forward for AI model training and inference. Trainium2 offers improved performance and energy efficiency, giving developers a way to speed up model training without incurring substantial costs. This puts advanced tools for efficient, cost-effective model training within reach of developers at every level, including junior developers.

Amazon Bedrock: New Capabilities

Amazon Bedrock introduces new capabilities that expand model choice and make it easier to build and scale custom generative artificial intelligence (AI) applications. As a fully managed service, Bedrock provides access to a range of leading large language and foundation models from AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon. The enhancements give customers a broader selection of industry-leading models, simpler customization with their own proprietary data, tools that automate task execution, and safeguards for deploying applications responsibly. Together, they make generative AI more accessible to organizations of any size or industry, helping them drive innovation and rethink customer experiences.
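As a rough illustration of what calling a Bedrock-hosted model looks like, here is a minimal Python sketch using boto3's `bedrock-runtime` client and its `invoke_model` method. It assumes the Anthropic Claude v2 text-completion request format and the example model ID `anthropic.claude-v2`; request and response bodies differ by model provider, and your account must have been granted access to the model. The helper names `build_claude_request` and `invoke` are hypothetical.

```python
import json


def build_claude_request(prompt: str, max_tokens: int = 300) -> dict:
    """Build keyword arguments for bedrock-runtime invoke_model.

    Assumption: the Anthropic Claude v2 text-completion body format
    (prompt wrapped in Human/Assistant turns, max_tokens_to_sample).
    """
    body = {
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    }
    return {
        "modelId": "anthropic.claude-v2",  # example model ID (assumption)
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }


def invoke(prompt: str, region: str = "us-east-1") -> str:
    """Send the request to Amazon Bedrock; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(**build_claude_request(prompt))
    payload = json.loads(response["body"].read())  # body is a streaming object
    return payload["completion"]  # Claude v2 text completions use "completion"
```

Keeping request construction separate from the network call makes it easy to swap in another provider's body format without touching the client code.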

Amazon SageMaker Capabilities

AWS announced five new capabilities for Amazon SageMaker, its fully managed service that brings together a broad set of tools to enable high-performance, low-cost machine learning for any use case.

SageMaker HyperPod: Reducing model training time by up to 40%, HyperPod lets customers distribute training workloads across hundreds or thousands of accelerators. It automatically divides the workload so the accelerators process it in parallel, which shortens overall training time.

SageMaker Inference: By letting customers deploy multiple models on a single AWS instance (virtual server), this feature makes better use of the underlying accelerators, reducing deployment costs and latency.

SageMaker Clarify: This feature helps customers evaluate, compare, and select the best models for their specific use cases based on the parameters they choose, supporting the responsible use of AI.

SageMaker Canvas Enhancements: Two new launches (Prepare data using natural-language instructions and Leverage models for business analysis at scale) in Canvas make it easier and faster for customers to integrate generative AI into their workflows.
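HyperPod's own workload distribution is handled by the service and the training libraries it runs, and is not shown here. As a toy illustration of the basic idea behind spreading one training job across many accelerators, this sketch shows data-parallel sharding: splitting a dataset into near-equal contiguous shards, one per worker. The function `shard` is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard(items: Sequence[T], num_workers: int) -> List[List[T]]:
    """Split items into num_workers contiguous shards whose sizes differ by at most one."""
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    base, extra = divmod(len(items), num_workers)
    shards = []
    start = 0
    for rank in range(num_workers):
        # The first `extra` workers each take one additional item.
        size = base + (1 if rank < extra else 0)
        shards.append(list(items[start:start + size]))
        start += size
    return shards
```

For example, `shard(list(range(10)), 4)` gives shards of sizes 3, 3, 2, and 2, so no single accelerator becomes a bottleneck by holding much more data than the others.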

Amazon Q in Connect

Amazon Q in Connect enhances customer service agent responsiveness by providing suggested responses, recommended actions, and links to pertinent articles based on real-time customer interactions. Additionally, contact center administrators can now create more intelligent chatbots for self-service experiences by articulating their objectives in plain language.

AWS Serverless Innovations

Continuing a line of innovation that began with its first service, Amazon S3, AWS introduced three new serverless innovations for Amazon Aurora, Amazon Redshift, and Amazon ElastiCache. They are designed to help customers analyze and manage data at any scale while simplifying their operations.

Four New Capabilities for AWS Supply Chain

Building on the launch of AWS Supply Chain at the previous re:Invent, AWS added four new capabilities to the service this year: supply planning, collaboration, sustainability, and a generative artificial intelligence (generative AI) assistant, Amazon Q. AWS Supply Chain is a cloud-based application that draws on Amazon's 30 years of supply chain experience to give businesses across industries a comprehensive, real-time view of their supply chain data. The new capabilities help customers forecast, optimize product replenishment to lower inventory costs and respond faster to demand, and communicate with suppliers. The platform also simplifies requesting, collecting, and auditing sustainability data, reflecting the growing focus on environmental responsibility. The generative AI assistant delivers a concise summary of critical risks related to inventory levels and demand fluctuations, with visualizations of the tradeoffs between different scenarios.
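AWS Supply Chain's internal planning models are not described here. As a point of reference for what "optimizing product replenishment" involves, this sketch computes the textbook reorder point: expected demand over the supplier lead time plus safety stock. It assumes independent, normally distributed daily demand; the function name and the default service factor `z = 1.65` (roughly a 95% cycle service level) are illustrative choices, not AWS parameters.

```python
import math


def reorder_point(avg_daily_demand: float, demand_std: float,
                  lead_time_days: float, z: float = 1.65) -> float:
    """Textbook reorder point = demand during lead time + safety stock.

    safety stock = z * demand_std * sqrt(lead_time_days), assuming daily
    demand is independent and normally distributed.
    """
    expected_demand = avg_daily_demand * lead_time_days
    safety_stock = z * demand_std * math.sqrt(lead_time_days)
    return expected_demand + safety_stock
```

With an average demand of 100 units a day, a daily standard deviation of 20, and a 4-day lead time, the reorder point is 400 + 1.65 × 20 × 2 = 466 units. Raising `z` buys more protection against stockouts at the cost of holding more inventory, which is exactly the kind of tradeoff the scenario visualizations are meant to expose.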

Amazon One Enterprise

Amazon One Enterprise streamlines access to physical locations. This palm recognition identity service lets organizations grant authorized users, including employees, fast, contactless entry to spaces such as offices, data centers, hotels, resorts, and educational institutions with a quick palm scan. Beyond physical locations, the technology can also provide access to secure software assets like financial data or HR records. Amazon One Enterprise is designed to improve security, prevent breaches, and reduce costs, all while protecting people's personal data.

Amazon S3 Express One Zone

Amazon Simple Storage Service (Amazon S3) is one of the most widely used cloud object storage services, holding more than 350 trillion data objects and handling more than 100 million data requests per second on average. AWS has introduced Amazon S3 Express One Zone, a new storage class within Amazon S3 that delivers data access up to 10 times faster than S3 Standard, with request costs up to 50% lower. It is aimed at customers whose applications demand very low latency and fast data access. Machine learning and generative artificial intelligence (generative AI) applications are a prime example: they often need to process millions of images and lines of text within minutes.
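S3 Express One Zone stores data in a new bucket type, the directory bucket, located in a single Availability Zone. A minimal sketch, assuming boto3's `create_bucket` call with a directory-bucket configuration: the name helper follows the `<base-name>--<az-id>--x-s3` naming pattern, the AZ ID `use1-az4` is only an example, and the character check is a simplified stand-in for the full bucket naming rules. The helper names are hypothetical.

```python
import re

# Directory buckets are named <base-name>--<az-id>--x-s3,
# for example "my-data--use1-az4--x-s3".
DIRECTORY_SUFFIX = "--x-s3"


def directory_bucket_name(base_name: str, az_id: str) -> str:
    """Build a directory-bucket name for the given Availability Zone ID."""
    # Simplified check: lowercase letters, digits, and hyphens only.
    if not re.fullmatch(r"[a-z0-9][a-z0-9-]*", base_name):
        raise ValueError("base name must use lowercase letters, digits, and hyphens")
    return f"{base_name}--{az_id}{DIRECTORY_SUFFIX}"


def create_directory_bucket(base_name: str, az_id: str, region: str) -> str:
    """Create an S3 Express One Zone directory bucket; needs boto3 and credentials."""
    import boto3  # imported lazily so the name helper works without boto3

    name = directory_bucket_name(base_name, az_id)
    s3 = boto3.client("s3", region_name=region)
    s3.create_bucket(
        Bucket=name,
        CreateBucketConfiguration={
            "Location": {"Type": "AvailabilityZone", "Name": az_id},
            "Bucket": {"DataRedundancy": "SingleAvailabilityZone", "Type": "Directory"},
        },
    )
    return name
```

Placing compute in the same Availability Zone as the bucket is what lets latency-sensitive workloads benefit from the single-zone design.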

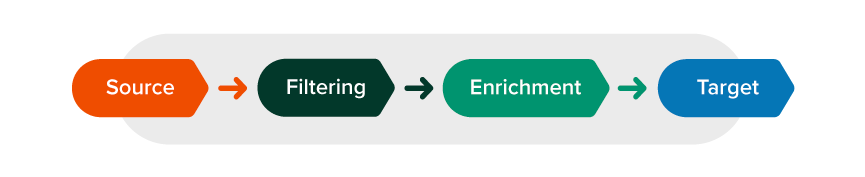

4 New Integrations for a Zero-ETL Future

Amazon Web Services (AWS) introduces four new integrations across its existing offerings that let customers access and analyze data from various sources without managing custom data pipelines. Traditionally, connecting diverse data sources to uncover insights required “extract, transform, and load” (ETL) processes, which are often manual and time-consuming. These integrations are a step toward a “zero-ETL future,” making it easier for customers to get their data where it is needed. By integrating data from across their systems, customers can uncover new insights, innovate faster, and make better data-driven decisions.

AWS Management Console for Applications

AWS announces the general availability of myApplications, a new addition to the AWS Management Console that simplifies application management. It provides a unified view of application metrics, including cost, health, security, and performance, so users can create and monitor applications and address operational issues promptly. The application dashboard offers one-click access to related AWS services such as AWS Cost Explorer, AWS Security Hub, and Amazon CloudWatch Application Signals. myApplications also simplifies organizing AWS resources through automatic application tagging, making application deployment and management more efficient. It is available in all AWS Regions where AWS Resource Explorer is available, and can be used through the AWS Management Console or programmatically.
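To show why automatic application tagging makes resources easier to organize, this sketch groups resource ARNs by the value of an application tag. It assumes the tag key is `awsApplication`; check the key your applications actually use. The input format, the function name `group_by_application`, and the sample ARNs are hypothetical, and real tag values may be application ARNs rather than short names.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping

# Assumption: the tag key that marks a resource as part of an application.
APP_TAG_KEY = "awsApplication"


def group_by_application(resources: Iterable[Mapping]) -> Dict[str, List[str]]:
    """Group resource ARNs by the value of the application tag.

    Each resource is a dict like {"arn": ..., "tags": {key: value}};
    resources without the tag are collected under "untagged".
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for res in resources:
        app = res.get("tags", {}).get(APP_TAG_KEY, "untagged")
        groups[app].append(res["arn"])
    return dict(groups)
```

Once every resource carries the tag, per-application views of cost, health, and security reduce to filtering on a single key.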

Schedule a meeting with our AWS cloud solution experts and accelerate your cloud journey with Idexcel.