Based on current trends and advancements in the technology industry, among other factors, we made several predictions about new services and features likely to be launched at the AWS re:Invent 2021 annual conference. The table below compares each of our predictions with the corresponding announcements AWS made at the event:

| S.No | Idexcel's Predictions | AWS re:Invent 2021 Announcements |
|---|---|---|
| 1 | Release of new-generation EC2 instances for faster machine learning training and inference, offering better price performance. | AWS announced three new Amazon EC2 instances powered by AWS-designed chips, including: (i) Amazon EC2 C7g instances, powered by new AWS Graviton3 processors, which provide up to 25% better performance for compute-intensive workloads than current-generation C6g instances powered by AWS Graviton2 processors; and (ii) Amazon EC2 Trn1 instances, powered by AWS Trainium chips, which AWS says offer the best price performance and the fastest time to train most machine learning models in Amazon EC2. |
| 2 | Amazon Textract will expand its reach with domain-specific extraction solutions that target particular types of documents. | Amazon Textract announced specialized support for automated processing of identity documents. Users can now quickly and accurately extract information from IDs (e.g., U.S. driver's licenses and passports) that come in varying templates and formats. |
| 3 | Improvements to Amazon Lex are likely to arrive later this year or early next year, following the recent acquisition of Wickr. | AWS announced the Amazon Lex Automated Chatbot Designer (in preview), a new feature that simplifies chatbot training and design by automating parts of the process. |
| 4 | A range of automation options within AWS services is likely to be announced. | Amazon SageMaker Inference Recommender: a new SageMaker capability introduced at AWS re:Invent 2021 that helps users choose the best available compute instance and configuration for deploying machine learning models, balancing inference performance and cost. It also reduces the time needed to get ML models into production by automating performance benchmarking and load testing across SageMaker ML instances. Users can then deploy their model to a real-time inference endpoint that delivers the best performance at the lowest cost. |
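To make "extracting information from IDs" concrete, here is a minimal sketch that flattens an Amazon Textract `AnalyzeID` response into a field-to-value dictionary per document. It assumes the response shape returned by boto3's `textract.analyze_id(DocumentPages=[...])` call (`IdentityDocuments` → `IdentityDocumentFields` → `Type` / `ValueDetection`). The sample response, the helper name `id_fields`, and the 90% confidence cutoff are hypothetical, chosen so the example runs without AWS credentials.

```python
from typing import Any, Dict, List

# Hypothetical sample shaped like an AnalyzeID response, as returned by
# boto3: textract.analyze_id(DocumentPages=[{"S3Object": {...}}])
SAMPLE_RESPONSE: Dict[str, Any] = {
    "IdentityDocuments": [
        {
            "DocumentIndex": 1,
            "IdentityDocumentFields": [
                {"Type": {"Text": "FIRST_NAME"},
                 "ValueDetection": {"Text": "JANE", "Confidence": 98.7}},
                {"Type": {"Text": "LAST_NAME"},
                 "ValueDetection": {"Text": "DOE", "Confidence": 98.9}},
                {"Type": {"Text": "DOCUMENT_NUMBER"},
                 "ValueDetection": {"Text": "", "Confidence": 40.2}},
            ],
        }
    ]
}


def id_fields(response: Dict[str, Any], min_confidence: float = 90.0) -> List[Dict[str, str]]:
    """Return one {field_type: value} dict per identity document.

    Fields with empty text or a confidence below min_confidence (a
    hypothetical cutoff) are dropped, e.g. to route them to manual review.
    """
    documents = []
    for doc in response.get("IdentityDocuments", []):
        fields = {}
        for field in doc.get("IdentityDocumentFields", []):
            value = field.get("ValueDetection", {})
            text = value.get("Text", "")
            if text and value.get("Confidence", 0.0) >= min_confidence:
                fields[field["Type"]["Text"]] = text
        documents.append(fields)
    return documents


if __name__ == "__main__":
    print(id_fields(SAMPLE_RESPONSE))
```

Keying on the normalized field type rather than on layout position is what lets one parser handle IDs with varying templates.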
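The performance-versus-cost trade-off that Inference Recommender automates can be sketched as a selection over its benchmark results. This sketch assumes the `InferenceRecommendations` list returned by boto3's `sagemaker.describe_inference_recommendations_job(JobName=...)`, where each entry carries `EndpointConfiguration.InstanceType` and `Metrics` such as `CostPerInference` and `ModelLatency` (assumed here to be in milliseconds). The sample numbers, the helper `cheapest_within_latency`, and the 100 ms target are hypothetical; this is one plausible selection rule, not how the service itself ranks recommendations.

```python
from typing import Any, Dict, List, Optional

# Hypothetical benchmark results, shaped like the InferenceRecommendations
# list in a describe_inference_recommendations_job response.
SAMPLE_RECOMMENDATIONS: List[Dict[str, Any]] = [
    {"EndpointConfiguration": {"InstanceType": "ml.c5.xlarge"},
     "Metrics": {"CostPerInference": 0.00004, "ModelLatency": 140}},
    {"EndpointConfiguration": {"InstanceType": "ml.m5.xlarge"},
     "Metrics": {"CostPerInference": 0.00005, "ModelLatency": 85}},
    {"EndpointConfiguration": {"InstanceType": "ml.g4dn.xlarge"},
     "Metrics": {"CostPerInference": 0.00009, "ModelLatency": 30}},
]


def cheapest_within_latency(recommendations: List[Dict[str, Any]],
                            max_latency_ms: float) -> Optional[str]:
    """Return the instance type with the lowest cost per inference among
    configurations whose measured latency meets the target, or None."""
    eligible = [r for r in recommendations
                if r["Metrics"]["ModelLatency"] <= max_latency_ms]
    if not eligible:
        return None  # no benchmarked configuration meets the latency target
    best = min(eligible, key=lambda r: r["Metrics"]["CostPerInference"])
    return best["EndpointConfiguration"]["InstanceType"]


if __name__ == "__main__":
    print(cheapest_within_latency(SAMPLE_RECOMMENDATIONS, max_latency_ms=100))
```

Filtering on latency first and then minimizing cost treats latency as a hard requirement and cost as the quantity to optimize, which is one common way to frame "best performance at the lowest cost".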

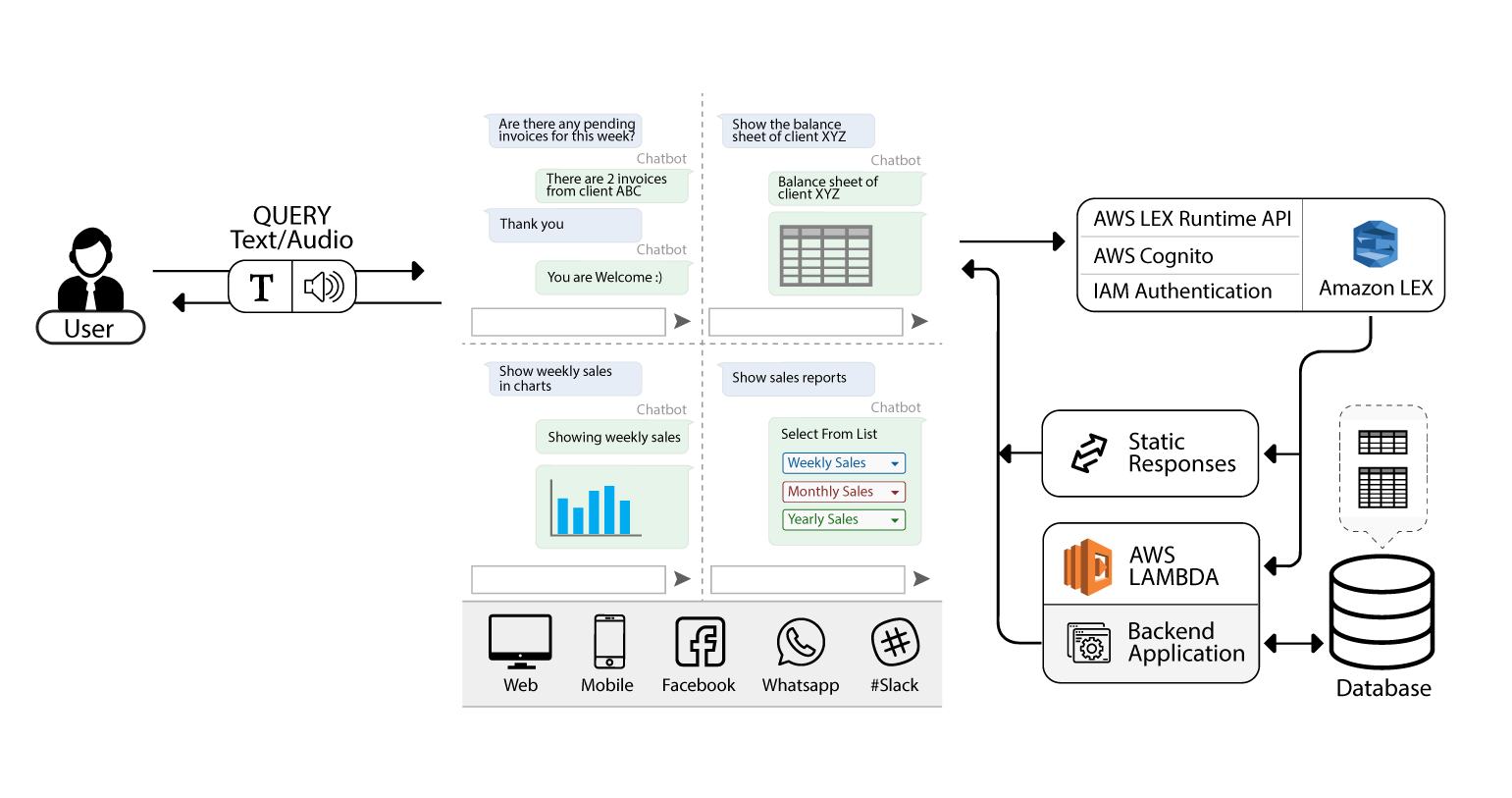

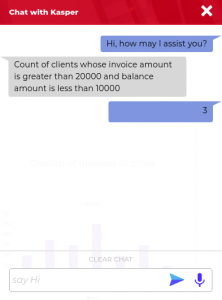

There are also cases where users want a sum, a count, or some other single-value response. For example, an inquiry might be “count the number of clients whose due date is within 2 weeks” or “sum of the invoice amount of all clients”. The response to such a query is just a single value, e.g., “10,000”.
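Behind a single-value answer there is typically one aggregate over the underlying records. A minimal sketch, assuming a hypothetical in-memory list of client records with `due_date` and `invoice_amount` fields (the chatbot's actual data source and query layer are not described here), expresses the two example inquiries as a filtered count and a sum.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Client:
    # Hypothetical record layout; field names are illustrative only.
    name: str
    due_date: date
    invoice_amount: float


def count_due_within(clients: List[Client], today: date, days: int = 14) -> int:
    """Single value for 'count the number of clients whose due date is within 2 weeks'."""
    horizon = today + timedelta(days=days)
    return sum(1 for c in clients if today <= c.due_date <= horizon)


def total_invoice_amount(clients: List[Client]) -> float:
    """Single value for 'sum of the invoice amount of all clients'."""
    return sum(c.invoice_amount for c in clients)


# Hypothetical sample data for illustration.
TODAY = date(2021, 12, 1)
SAMPLE_CLIENTS = [
    Client("Apollo Inc.", date(2021, 12, 10), 4000.0),
    Client("Hermes LLC", date(2021, 12, 20), 6000.0),
    Client("Zeus Corp", date(2022, 1, 15), 0.0),
]

if __name__ == "__main__":
    print(count_due_within(SAMPLE_CLIENTS, TODAY))
    print(total_invoice_amount(SAMPLE_CLIENTS))
```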

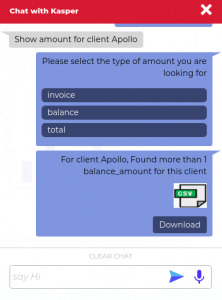

The third type of response is the response card, which clarifies the user's intent. Suppose the user asks an ambiguous question such as “what is the amount of Apollo Inc.?” The chatbot finds that the query is missing a keyword, because the user did not specify the type of amount (invoice amount or balance amount). Kasper then prompts back with a list of possible options, so the user can select the appropriate one and receive an accurate result.
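The clarification step can be sketched as a check for an under-specified query followed by a response card that lists the options. This sketch assumes, as a hypothesis, that the chatbot runs on Amazon Lex V2 with a Lambda code hook, so the returned dict follows the Lex V2 Lambda response format (a `sessionState.dialogAction` of type `ElicitSlot` plus an `ImageResponseCard` message with buttons). The intent name `GetAmount`, the slot name `AmountType`, and the simple keyword matching are hypothetical and not Kasper's actual implementation.

```python
from typing import Any, Dict, Optional

# Hypothetical amount types the bot can disambiguate between.
AMOUNT_TYPES = ["invoice amount", "balance amount"]


def clarify_amount(question: str) -> Optional[Dict[str, Any]]:
    """Return a Lex V2-style ElicitSlot response with a response card when the
    question mentions an amount without saying which kind; otherwise None."""
    text = question.lower()
    if "amount" not in text or any(t in text for t in AMOUNT_TYPES):
        return None  # not about amounts, or already specific enough
    return {
        "sessionState": {
            "dialogAction": {"type": "ElicitSlot", "slotToElicit": "AmountType"},
            # Hypothetical intent/slot names; AmountType is still unfilled.
            "intent": {"name": "GetAmount", "slots": {"AmountType": None},
                       "state": "InProgress"},
        },
        "messages": [{
            "contentType": "ImageResponseCard",
            "imageResponseCard": {
                "title": "Which amount do you mean?",
                # One button per option; the value is what gets sent back.
                "buttons": [{"text": t.title(), "value": t} for t in AMOUNT_TYPES],
            },
        }],
    }


if __name__ == "__main__":
    print(clarify_amount("what is the amount of Apollo Inc.?"))
```

When the user taps a button, its `value` comes back as the next input, which fills the missing slot so the original query can be answered.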